We've been hard at work building out support for a more native experience with Vision Pro for Secret Chest. Along the way I realized how familiar a lot of this felt. So I set out to write a little article on scanning physical objects so they could be used in augmented reality apps on Vision Pro. Scope creep...

I got into programming in the 1980s. A lot happened in the years since - much of which I didn’t notice until I needed to. Some of it I picked up on over the years, and as a history nerd, I thought I’d expand the scope of this little article to document how we got where we are as well. Skip to later parts if you’re not interested in those bits, but as someone who wrote a 2,000 page book on the history of computers and has been making computer graphics and 3d printing for years, I find it all faaascinating (and knowing the past helps us future-proof our projects).

Part 1: Early Graphics History

As Neal Stephenson once said, "In the beginning was the command line." Unix was created at Bell Labs in 1969. That was made possible, in part, because of the advent of interactive computers at MIT. Some of the earliest transistorized computing projects at MIT were run on the Lincoln TX-2 computer they pioneered. Ken Olsen left MIT to found Digital Equipment Corporation, in part based on his experiences helping to create the TX-2 (loosely based on the Whirlwind project, which pioneered use of the light gun to interact with objects).

The team at MIT attempted to build an early operating system called Multics, which ran on a GE mainframe. Around the same time, Ivan Sutherland wrote a little program called Sketchpad for that TX-2, developed in 1963 for his PhD thesis. That is considered one of the most influential programs ever written by an individual and laid the foundation for several key areas in computer science, including:

Human-Computer Interaction (HCI): It introduced a novel way for users to interact with computers directly through a graphical interface, using a light pen to draw on the screen. This paved the way for modern graphical user interfaces (GUIs) that we use today with windows on screens, icons, and menus.

Computer Graphics: Sketchpad introduced functionalities like real-time drawing, manipulation, and editing of graphics on a computer screen, marking a significant step forward in the field.

Computer-Aided Design (CAD): The ability to draw and modify designs digitally with Sketchpad laid the groundwork for the development of sophisticated CAD software used extensively in various engineering and design disciplines.

Unlike the text-based interfaces prevalent at the time, Sketchpad allowed users to draw shapes and lines directly on the screen using a light pen. It introduced an early form of object-oriented programming, where elements like lines and circles were treated as objects with specific properties and behaviors. Sketchpad allowed users to define relationships between different parts of the drawing, enabling automatic updates when changes were made. For instance, modifying the dimensions of a rectangle would automatically adjust connected lines.

Two years after Sketchpad was introduced, Sutherland wrote the 1965 paper, "The Ultimate Display," where he proposed a system that could completely immerse a user in a computer-generated environment and built a prototype, the forerunner to the modern headsets in use today. When Sutherland became a professor, he taught other influential computer scientists, like founders from Adobe, Pixar, and Silicon Graphics, as well as academics who developed anti-aliasing, the first object-oriented programming languages, shading for computer graphics, and the very first 3d rendering of computer graphics. Ivan Sutherland's work with Sketchpad earned him the prestigious Turing Award in 1988, a testament to its lasting impact. By 1988, the personal computer revolution had come and graphics had matured along with the pervasiveness of computers in homes and organizations around the world. They were used for business and for fun.

Mystery House was a game introduced on the Apple II in 1980, written by Roberta and Ken Williams. It was one of the earliest examples of a graphical adventure game. While text-based adventures existed before, "Mystery House" added basic visuals on top of a typed-command interface, paving the way for future graphic adventure titles like another Roberta Williams game, King's Quest, and the influential Sierra adventure games. It laid the groundwork for core mechanics typical of the genre, like navigating virtual environments and interacting with objects and characters graphically. Its success encouraged the creation of more sophisticated games with richer narratives, improved graphics, and deeper puzzle mechanics.

King's Quest was an early attempt at a 3d game that used various techniques to create the illusion of a 3d world. The game primarily relied on pre-rendered 2D images for the backgrounds. These detailed scenes created the illusion of depth and a fixed perspective. Characters and objects were represented by 2D sprites, essentially small images that could be animated to simulate movement. It also employed forced perspective techniques to create the impression of depth. Objects farther away were drawn smaller, mimicking how objects appear smaller in real life as the distance increases. Sprites were scaled up or down depending on their position on the screen, further enhancing the illusion of depth.

King’s Quest also added a technique known as parallax scrolling to create a sense of movement. Background elements scrolled at different speeds compared to foreground objects, mimicking how distant objects appear to move slower than closer objects when observing a scene from a moving viewpoint. Imagine a stage with a painted backdrop depicting a landscape (pre-rendered background). Actors (2D sprites) move across a platform in the foreground, their size adjusted based on their position (scaling). The backdrop itself might move slightly slower than the actors to create a subtle depth effect (parallax scrolling). As technology advanced, games gradually transitioned towards true 3D graphics capabilities. Later titles in the King's Quest series incorporated 3D models and environments, offering a more immersive visual experience.
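The stage analogy above boils down to two small calculations. Here is a minimal sketch in Python, not anything from the original game code: the function names, the depth factor, and the 0.4 minimum scale are all assumptions made up for illustration.

```python
def parallax_offset(camera_x: float, depth: float) -> float:
    """Horizontal shift of a layer when the camera moves.

    depth 1.0 is the foreground; larger values are farther away and
    therefore shift less (hypothetical convention for this sketch).
    """
    return -camera_x / depth


def sprite_scale(y: float, horizon_y: float, ground_y: float,
                 min_scale: float = 0.4) -> float:
    """Scale a sprite by how far down the screen its feet are.

    Near the horizon the sprite shrinks to min_scale; at the bottom of
    the walkable area it is drawn at full size.
    """
    t = (y - horizon_y) / (ground_y - horizon_y)
    t = max(0.0, min(1.0, t))  # clamp so sprites never grow or shrink past the limits
    return min_scale + (1.0 - min_scale) * t


# The camera has moved 100 pixels to the right.
foreground_shift = parallax_offset(100, depth=1.0)  # actors' platform
backdrop_shift = parallax_offset(100, depth=4.0)    # painted backdrop
```

The backdrop moves a quarter as far as the foreground, which is the whole trick: the eye reads the slower layer as being farther away.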

Other programs had introduced different techniques to get 3d graphics on a computer. 3D Art Graphics was developed by Kazumasa Mitazawa in 1978 for the Apple II and added the ability for users to define and render simple 3D objects like spheres and cubes as well as limited photorealistic visuals as a step towards true 3D representation on personal computers. Maze War was written in 1973 by Steve Colley and added wireframe models and first-person movement in a 3d perspective. Programs like Autodesk 3D Studio (1990) and Softimage (1986) marked significant milestones in providing comprehensive 3D modeling and animation functionalities.

An influential tool in creating 3d graphics is Blender. Dutch software developer Ton Roosendaal initially developed an earlier graphics application called Traces, for the Amiga platform from 1987-1991. Blender itself was officially launched in 1994, as an in-house application by NeoGeo, the animation studio he co-founded. In 2002, after the company then behind Blender ran into financial difficulties, Roosendaal started the "Free Blender" campaign to raise funds to open-source the software, which has since transitioned into a community-driven development model. The fact that it’s free has led to it being widely used to create 3d models. 20 years later, even I was able to build models, as seen on my Thingiverse page at https://www.thingiverse.com/thing:5399776. Thingiverse is a great place where people can share and download 3d models, primarily used to 3d print things.

Part 2: From Virtual To Physical

The style of 3d printing mostly done today has been around since the early 1980s. Dr. Hideo Kodama filed a patent application for an additive technique in 1980 and Chuck Hull filed one for stereolithography in 1984. Stratasys then developed Fused Deposition Modeling, or FDM, and patented that in 1989. The FDM patent expired in 2009. In 2005, a few years before the FDM patent expired, Dr. Adrian Bowyer started a project to bring inexpensive 3D printers to labs and homes around the world. That project evolved into what we now call the Replicating Rapid Prototyper, or RepRap for short.

RepRap evolved into an open source concept to create self-replicating 3D printers and by 2008, the Darwin printer was the first to use RepRap. As a community started to form, more collaborators designed more parts. Some were custom parts to improve the performance of the printer, or replicate the printer to become other printers. Others held the computing mechanisms in place. Some even wrote code to make the printer able to boot off a MicroSD card and then added a network interface so files could be uploaded to the printer wirelessly.

There was a rising tide of printers. People read about what 3D printers were doing and wanted to get involved. There was also a movement in the maker space, so people wanted to make things themselves. There was a craft to it. Part of that was wanting to share, whether at a maker space or by posting ideas, plans, and code online, like the RepRap team had done.

One of those maker spaces was NYC Resistor, founded in 2007. Bre Pettis, Adam Mayer, and Zach Smith from there took some of the work from the RepRap project and had ideas for a few new projects they’d like to start. One was Thingiverse, which Zach Smith created. Bre Pettis joined in and they allowed users to upload .stl files and trade them, now that those were the standard for creating models for 3d printing. It’s now the largest site for trading hundreds of thousands of designs to print about anything imaginable. Well, everything except guns.

Then comes 2009. The patent for FDM expires and a number of companies respond by launching printers and services. Almost overnight the price for a 3D printer fell from $10,000 to $1,000 and continued to drop until printers like Creality could be purchased for $100. The market was flooded with cheap printers - and yet developers like Prusa and Bambu aim to build better experiences for users at higher price points. There are also really high end printers that can cost hundreds of thousands of dollars. But most FDM and resin printers use that stl file, which paved the way for printing everything from teeth to fidget spinners.

The stl format was initially developed by Albert Consulting Group in the 1980s for 3d Systems and Chuck Hull, who helped develop the early SLA printing technique and helped spec it out. Unlike other formats that might store additional information like color or texture, STL focuses on the shape and surface of the model, representing 3D objects as a collection of triangles. These small, flat shapes come together to form the overall surface of the model and are commonly referred to as triangle meshes. Each triangle in an STL file has an associated normal vector, indicating the outward direction of the triangle's surface. This information is crucial for proper rendering and 3D printing.
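To make the triangle-plus-normal idea concrete, here is a minimal Python sketch that derives a facet normal from three vertices using a cross product. The function name is made up for this example, and it assumes the usual STL convention that vertices are listed counter-clockwise when viewed from outside the model.

```python
import math


def facet_normal(a, b, c):
    """Unit normal of triangle (a, b, c), each vertex an (x, y, z) tuple.

    Assumes counter-clockwise winding seen from outside, so the result
    points outward.
    """
    ux, uy, uz = (b[i] - a[i] for i in range(3))  # edge a -> b
    vx, vy, vz = (c[i] - a[i] for i in range(3))  # edge a -> c
    nx = uy * vz - uz * vy                        # cross product u x v
    ny = uz * vx - ux * vz
    nz = ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        raise ValueError("degenerate triangle: the three points are collinear")
    return (nx / length, ny / length, nz / length)


# A triangle lying flat on the build plate, wound counter-clockwise from above.
up = facet_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
```

Swapping any two vertices flips the normal, which is why a model with inconsistent winding can confuse a slicer about which side is the inside.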

STL files can exist in either text-based or binary format. Text-based files are human-readable but larger in size, while binary files are smaller but require specific software to interpret. Since STL focuses solely on surface geometry, intricate details or curves within the model might be lost during the conversion process from a higher-quality format to STL. For complex models with numerous triangles, STL files can become quite large, potentially impacting storage requirements and transmission times.
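The size difference between the two encodings is easy to see in a few lines of Python. This is a sketch under the commonly documented layouts (ASCII: `solid`/`facet`/`vertex` keywords; binary: an 80-byte header, a 32-bit triangle count, then 50 bytes per triangle), with helper names invented for the example.

```python
import struct


def to_ascii_stl(triangles, name="demo"):
    """triangles: list of (normal, v1, v2, v3), each an (x, y, z) tuple."""
    lines = [f"solid {name}"]
    for normal, *vertices in triangles:
        lines.append("  facet normal {:e} {:e} {:e}".format(*normal))
        lines.append("    outer loop")
        for vertex in vertices:
            lines.append("      vertex {:e} {:e} {:e}".format(*vertex))
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


def to_binary_stl(triangles):
    """Same triangles as little-endian binary STL."""
    out = bytearray(b"\0" * 80)                # 80-byte header, content is free-form
    out += struct.pack("<I", len(triangles))   # unsigned 32-bit triangle count
    for normal, v1, v2, v3 in triangles:
        # 12 float32 values (normal + 3 vertices) and a 16-bit attribute field
        out += struct.pack("<12fH", *normal, *v1, *v2, *v3, 0)
    return bytes(out)


one_triangle = [((0, 0, 1), (0, 0, 0), (1, 0, 0), (0, 1, 0))]
ascii_size = len(to_ascii_stl(one_triangle).encode("ascii"))
binary_size = len(to_binary_stl(one_triangle))  # 80 + 4 + 50 bytes
```

For a single triangle the header dominates the binary file, but every additional triangle costs a fixed 50 bytes in binary versus a couple of hundred characters in text.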

There are other formats for 3d printing objects. AMF (Additive Manufacturing File Format) offers a more comprehensive approach, encompassing not only the surface geometry but also color, texture, and other relevant information for 3D printing. 3MF (3D Manufacturing Format) is similar to AMF and provides a more feature-rich alternative to STL, supporting advanced functionalities like lattice structures and build instructions. Some vendors have support for these other formats in their software, like BambuStudio and Lychee - but they all eventually render (or slice) the STL into a set of instructions that are sent to printers, often as a .gcode file. That works similarly to how the old programming language Logo works, sending the printer head zipping across an x and y axis for each layer to print, feeding filament, and then moving the printer up on the z axis as each layer is finished. Think of a tool like AutoCAD or Blender as something like Microsoft Word, where instead of making a document, a designer makes an object.
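To show the Logo-like flavor of those instructions, here is a toy Python sketch that emits G-code for the outline of a square, one perimeter per layer. It uses common commands (G21, G90, G28, G0, G1), but the function name, feed rate, and extrusion-per-millimetre value are assumptions for illustration; a real slicer also handles temperatures, infill, retraction, and much more, so don't send this to a printer as-is.

```python
def square_perimeter_gcode(size_mm, layers, layer_height=0.2,
                           feed=1200, e_per_mm=0.05):
    """Return G-code lines tracing a square outline on each layer.

    e_per_mm (filament pushed per mm of travel) is a made-up value.
    """
    lines = [
        "G21 ; millimetre units",
        "G90 ; absolute positioning",
        "G28 ; home all axes",
    ]
    corners = [(size_mm, 0), (size_mm, size_mm), (0, size_mm), (0, 0)]
    extruded = 0.0
    for layer in range(1, layers + 1):
        z = layer * layer_height
        lines.append(f"G1 Z{z:.2f} F{feed} ; move up to layer {layer}")
        lines.append("G0 X0.00 Y0.00 ; travel to the start corner")
        for x, y in corners:
            extruded += size_mm * e_per_mm  # each side is size_mm long
            lines.append(f"G1 X{x:.2f} Y{y:.2f} E{extruded:.4f} F{feed}")
    return lines


print("\n".join(square_perimeter_gcode(size_mm=10, layers=2)))
```

Each `G1 X.. Y.. E..` line is the printer equivalent of Logo's "pen down, move forward": go to a position while feeding filament.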

Part 3: From Physical to Virtual

That’s been a lot on the history of how some of these 3d formats came to be. Thus far the article has focused on creating images that would be used to display images on screens, print on paper (with significant contributions from Xerox PARC and then the team at Adobe), or even on a 3d printer. There were tons of other contributions when it came to getting images into computers. Russell Kirsch and his team at the National Bureau of Standards (NBS) built the first image scanner specifically designed for use with a computer, made possible in part by Giovanni Caselli, Édouard Belin, and Western Union from 1857 all the way to the modern era.

Steven Sasson developed the first prototype digital camera in 1975. It was 8 pounds and only captured black and white images at 100 by 100 pixels and took 23 seconds to grab an image and save it to a digital cassette tape. It was made possible by the Fairchild CCD image sensor (Fairchild being the company the “traitorous eight” started when they left Shockley Semiconductor to effectively put the word Silicon in Silicon Valley before two of them ran off to start Intel). Here, we see multiple inventions made possible by other advances. A steady evolution. But they all needed standards. Those graphics could be saved as GIF files (developed by Steve Wilhite at CompuServe in 1987), JPEG (developed by the Joint Photographic Experts Group, a committee formed in 1986), and PNG (developed by Thomas Boutell and the PNG Development Group in 1996).

Along the way, plenty of companies started looking at, and developing tools, to 3d scan physical objects to create STLs. Early efforts used still images and tried to piece them together using a mesh of images that are processed using various machine learning techniques.

By the 2020s, it was possible to capture images with a digital camera, create manual images, and print them. It was also possible to create 3d files and print them or import them into popular game engines, like Unity. Unity supports FBX (Film Box) for importing 3D models with embedded animations, OBJ for importing 3D models (typically requires separate animation files, like FBX or Alembic, to define the animation data), DAE (Collada), an open format that can handle 3D models, animations, materials, and other elements, and GLTF (GL Transmission Format), a newer format that efficiently compresses and is well-suited for real-time rendering applications like Unity. Further, animation files can be imported from Motion Builder (*.mbc), FBX, or BVH. Other game engines, like CryEngine, usually support FBX for the 3d models, but also take CAF, FSQ, ANM, and CGA. Notice that instead of one file type for most 3d printing needs or 3 for most image display needs, this list is exploding and only includes two out of dozens of available game engines. The lack of core standards is a killer.

The Meta Quest supports OBJ, FBX, and GLTF outputs natively. It can play VR videos in .mp4 or, for panoramic videos, MOV and F4V. It natively uses the equirectangular video format, a spherical projection that fills a rectangular frame. The Quest supports limited options for capturing video and objects, but tools like Adobe Premiere Pro, Autodesk ReCap, and Meshroom are available on their app store and do a far better job. Whatever software is being used, the quality of the 3D reconstruction highly depends on the quality of the original equirectangular video, lighting conditions, and the capabilities of the processing software.

3D scanners use various technologies to capture the 3D geometry of an object, essentially creating a digital representation of its shape and dimensions. This begins with data acquisition, then moves to various scanning techniques, and ultimately data processing and reconstruction.

The data acquisition comes in two forms, active and passive scanning. Active scanning leverages the scanner to actively project a specific signal, such as a laser beam or structured light, onto the object's surface. The reflected signal is then measured to determine the object's distance from the scanner (for example, using the time it takes for a pulse to bounce back to the scanner). Passive scanning involves using the scanner to capture the existing ambient light or radiation reflected from the object's surface. This method is less common but can be used for specific applications. Structured light scanning involves a projector that casts a known pattern of light (stripes, grids, etc.) onto the object and then uses cameras to capture how the pattern deforms as it hits the object's surface. The distortion of the pattern then allows the scanner to calculate the 3D shape.
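The core geometry behind structured light is triangulation: the farther a surface is from the scanner, the less the projected pattern appears to shift in the camera image. A minimal Python sketch of that relationship follows, assuming an idealized, rectified projector-and-camera pair. The function and parameter names are invented here, and real scanners add calibration, lens correction, and pattern decoding on top of this.

```python
def triangulated_depth(focal_length_px: float, baseline_m: float,
                       shift_px: float) -> float:
    """Depth of a surface point from how far a pattern feature shifted.

    focal_length_px: camera focal length expressed in pixels
    baseline_m:      distance between projector and camera
    shift_px:        observed shift (disparity) of the feature in pixels
    """
    if shift_px <= 0:
        raise ValueError("shift must be positive; zero shift means infinitely far")
    return focal_length_px * baseline_m / shift_px


# A stripe that shifted 40 px, seen by an 800 px focal length camera
# sitting 10 cm from the projector, is about 2 m away.
depth_m = triangulated_depth(800, 0.1, 40)
```

Because depth is inversely proportional to the shift, small measurement errors matter more for distant surfaces, which is one reason these scanners tend to work best up close.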

Once captured, the scanner software takes the collected distance or light pattern data points and translates them into a 3D point cloud. A point cloud is a collection of data points in space, each representing a specific location on the object's surface. Additional processing might be required to convert the point cloud into a more usable format, such as a 3D mesh (like that STL, a collection of interconnected triangles) suitable for further editing or 3D printing. The most common scanners are handheld scanners, which offer portability and are suitable for capturing small to medium-sized objects; structured light scanners, which are often used for capturing complex shapes with intricate details; and industrial scanners, which provide high accuracy and speed for large-scale applications. The scanners then typically plug into a computer and the 3D scanner software itself processes the captured data and converts it into usable formats. In the below video, there's a stand that rotates a model I printed and painted in order to generate a point cloud from a number of different angles.
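The rotating-stand setup works because each scan can be rotated back by the known turntable angle, so all the partial point clouds land in one shared frame. Here is a rough Python sketch of that merge step. It assumes an ideal turntable spinning about the z axis through the origin with exactly known angles; the function names are hypothetical, and real software also refines the alignment because the angles and axis are never perfect.

```python
import math


def rotate_z(point, angle_deg):
    """Rotate an (x, y, z) point about the z axis by angle_deg."""
    a = math.radians(angle_deg)
    x, y, z = point
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


def merge_turntable_scans(scans):
    """scans maps turntable angle (degrees) to the points seen at that angle.

    Each scan is rotated back by its angle so every point ends up in the
    object's own coordinate frame.
    """
    cloud = []
    for angle_deg, points in scans.items():
        cloud.extend(rotate_z(p, -angle_deg) for p in points)
    return cloud


# The same spot on the model, seen before and after a quarter turn.
merged = merge_turntable_scans({
    0: [(1.0, 0.0, 0.5)],
    90: [(0.0, 1.0, 0.5)],
})
```

Both entries in `merged` come out at (roughly) the same location, which is what lets the software treat the separate passes as one object.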

Imagine the 3D scanner is like a camera that instead of capturing color information, it measures the distance or light patterns at various points on the object's surface. This information is then used to build a digital representation of the object's 3D shape. That includes machine learning algorithms to extract key features from photos or images, such as corners, edges, and textures as well as Point Cloud Processing, where LiDAR data is denoised (removing noise) and filtered to prepare it for further processing.
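One common way to denoise a point cloud is statistical outlier removal: points whose nearest neighbors are unusually far away get dropped. The Python sketch below is a brute-force version of that idea, not what any particular scanner ships. The function name and the defaults for `k` and `std_ratio` are assumptions, and real tools use spatial indexes rather than comparing every pair of points.

```python
import math
from statistics import mean, pstdev


def remove_outliers(points, k=3, std_ratio=1.0):
    """Drop points whose mean distance to their k nearest neighbors is
    more than std_ratio standard deviations above the average."""
    def mean_neighbor_distance(i):
        distances = sorted(
            math.dist(points[i], other)
            for j, other in enumerate(points) if j != i
        )
        return mean(distances[:k])

    scores = [mean_neighbor_distance(i) for i in range(len(points))]
    cutoff = mean(scores) + std_ratio * pstdev(scores)
    return [p for p, score in zip(points, scores) if score <= cutoff]


# A flat 3x3 patch of surface points plus one stray reading far away.
patch = [(float(x), float(y), 0.0) for x in range(3) for y in range(3)]
stray = (100.0, 100.0, 100.0)
cleaned = remove_outliers(patch + [stray])
```

The stray point's neighbors are all far away, so its score lands well above the cutoff and it is filtered out while the patch survives intact.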

The machine learning usually also does feature matching, or identifies corresponding features across different images. This establishes relationships between the 2D information in the photos and the 3D points in the LiDAR data (if available). Based on the matched features and potentially the LiDAR point cloud, advanced algorithms estimate the 3D structure of the object. This can involve techniques like Multi-View Stereo (MVS), which analyzes corresponding features in multiple images to reconstruct the 3D shape and Depth Map Estimation, which predicts the depth information for each pixel in the images, which can then be used to create a 3D model. The reconstructed 3D information is converted into a mesh, which is a collection of interconnected triangles representing the object's surface.
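Once a depth value exists for each pixel, turning the depth map into 3D points is a matter of running the camera model backwards. Here is a minimal Python sketch using the standard pinhole camera model. The function name is made up, the intrinsics (`fx`, `fy`, `cx`, `cy`) would come from calibration in practice, and the sketch treats a depth of zero as "no reading", which is an assumption.

```python
def backproject_depth_map(depth, fx, fy, cx, cy):
    """Turn a depth map (rows of depths along the camera's z axis) into points.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Pixels with depth <= 0 are treated as missing and skipped.
    """
    points = []
    for v, row in enumerate(depth):          # v is the pixel row
        for u, z in enumerate(row):          # u is the pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A tiny 2x2 depth map with one missing reading.
cloud = backproject_depth_map(
    [[0.0, 2.0],
     [2.0, 2.0]],
    fx=2.0, fy=2.0, cx=0.5, cy=0.5,
)
```

The resulting list is a small point cloud, ready for the meshing step that connects neighboring points into triangles.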

Part 4: Beyond Stitching Photos

A number of machine learning techniques are then used in the processing. This includes deep learning, which uses Convolutional Neural Networks (CNNs) for image feature extraction and depth map estimation. They can learn complex patterns from large datasets of images and LiDAR scans, leading to more accurate 3D reconstructions. Additionally, many of the software tools to combine point clouds use Structure from Motion (SfM), a fundamental technique in photogrammetry that estimates the camera poses (position and orientation) for each image and uses this information to reconstruct the 3D structure.

The modern techniques are to use photos and LiDAR together, which provides a number of advantages. Photos provide rich texture information, while LiDAR offers accurate depth data. Combining them leads to more robust and detailed 3D models. Photos struggle in low-texture and low light areas, while LiDAR can be susceptible to noise. Combining them mitigates these limitations, provided that the machine learning models can properly map point clouds and apply that richer texture information. For the most part, this has been a failure for downmarket tools. Turns out that accurately matching features across multiple images, especially in challenging scenarios like repetitive textures or occlusions, remains an active area of research, and one that will only progress with better data science and processor capabilities. Processing large datasets of images and LiDAR scans can be computationally expensive.

Yet, my hobby of 3d printing random things isn’t the only use for this type of technology. I also scan and print things that break around the house. Like the part that broke on my vacuum. I could have bought a new vacuum, but I printed a part in about 6 minutes based on the ability to glue what broke, scan it, and then print a replacement. Creating detailed 3D models also works in cultural heritage preservation, product design, and augmented and virtual reality applications. It’s also used in autonomous vehicles to create 3D reconstructions of environments for self-driving cars. Robots can also leverage 3D scans for tasks like object manipulation and navigation in complex environments.

Most people know how cameras work. Unlike film cameras that capture light on a chemical film, digital cameras use an electronic process to record images. Here's a simplified breakdown: Light enters the camera through the lens, which focuses it onto the image sensor, much as it has since that Fairchild sensor mentioned earlier. The image sensor is typically a CCD or CMOS chip and contains millions of tiny light-sensitive pixels. Each pixel records the light it receives, converting it into an electrical signal. Then the camera's internal processing unit (similar to a mini computer) takes over for signal processing. It amplifies the weak electrical signals from each pixel and then converts the analog signals from the sensor into digital data (ones and zeros). Factors like exposure time and ISO settings influence this conversion.

Most sensors capture only grayscale information. A Bayer filter or similar color filter array placed over the sensor helps capture color data. By analyzing the light hitting different color filters on each pixel, the camera recreates the full color image. The processed digital data is compressed and saved onto a memory card in a format like PNG, JPEG, or RAW. The image is then viewed on the camera's screen (like the screen of an iPhone) or transferred to a computer.
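To make the color filter idea concrete, here is a deliberately crude Python sketch. It assumes the common RGGB Bayer layout (red and green on even rows, green and blue on odd rows) and rebuilds color by collapsing each 2x2 cell into one RGB pixel. Real cameras interpolate to keep full resolution, and the function names are invented for this example.

```python
def bayer_channel(x: int, y: int) -> str:
    """Which color filter sits over pixel (x, y) in an RGGB mosaic."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"


def demosaic_2x2(raw):
    """Collapse each 2x2 RGGB cell of raw sensor values into one (r, g, b) pixel.

    The output has half the width and height of the input.
    """
    image = []
    for y in range(0, len(raw) - 1, 2):
        row = []
        for x in range(0, len(raw[y]) - 1, 2):
            red = raw[y][x]
            green = (raw[y][x + 1] + raw[y + 1][x]) / 2  # two green samples per cell
            blue = raw[y + 1][x + 1]
            row.append((red, green, blue))
        image.append(row)
    return image


# One 2x2 cell of raw brightness readings becomes a single color pixel.
pixel = demosaic_2x2([[200, 100],
                      [120, 50]])
```

Half of the sensor's pixels sit under green filters, which roughly mirrors the eye's greater sensitivity to green light.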

LIDAR, which stands for Light Detection and Ranging, is a remote sensing method that uses light pulses to measure distance. It essentially acts like a radar, but instead of radio waves, it uses pulsed laser beams emitted towards the target object or area. These laser pulses travel outward and eventually reflect off the surface they encounter. The reflected light is then captured by a sensor in the LIDAR system. By measuring the time it takes for the laser pulse to travel from the source to the target and back, the LIDAR system can calculate the distance to the object. The speed of light is a known constant, so the time difference between sending the pulse and receiving the reflection directly translates to the distance traveled.
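The time-to-distance conversion is a one-liner: the pulse travels out and back, so the distance is half of the speed of light multiplied by the round-trip time. A small Python sketch, with a function name chosen for this example and ignoring real-world details like the slightly slower speed of light in air:

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, in a vacuum


def tof_distance_m(round_trip_seconds: float) -> float:
    """Distance to the target from a pulse's round-trip time."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# One nanosecond of round-trip time is only about 15 cm of distance.
per_nanosecond = tof_distance_m(1e-9)
```

That tiny number is why LiDAR needs very precise timing electronics: millimetre accuracy means resolving time differences of a few picoseconds.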

LIDAR systems often employ scanning mechanisms, such as rotating mirrors or oscillating beams, to direct the laser pulses in various directions. By capturing the distance information from multiple points, LIDAR can build the 3D point cloud mentioned earlier. This point cloud represents a collection of data points in space, each containing the X, Y, and Z coordinates (representing the 3D location) of the reflected light. This is the outside, or boundaries of an object. This is useful for mapping and surveying, as well as some of the other uses mentioned earlier, especially when using airborne LiDAR that can see through gaps in vegetation to the ground below, like to find new archaeological sweetness.
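Each LiDAR reading is really a distance plus the direction the beam was pointing, and converting that to X, Y, and Z is basic trigonometry. Here is a minimal Python sketch. The axis convention (azimuth measured in the horizontal plane from the x axis, elevation measured up from that plane) and the function names are assumptions, since every device documents its own.

```python
import math


def to_cartesian(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one range reading and its beam direction to (x, y, z)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def sweep_to_point_cloud(readings):
    """readings: iterable of (range_m, azimuth_deg, elevation_deg) tuples."""
    return [to_cartesian(r, az, el) for r, az, el in readings]


# Three pulses: straight ahead, to the left, and straight up.
cloud = sweep_to_point_cloud([(1.0, 0, 0), (2.0, 90, 0), (3.0, 0, 90)])
```

Run that over every pulse in a sweep and the list of tuples is the point cloud.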

The LiDAR and camera combo punch has allowed for large industrial uses. But the tech has gone downmarket. A quick search on Amazon nets hundreds of 3d scanners ranging in price from a few hundred dollars to thousands. I’ve tested dozens, thanks to Amazon’s generous return policy. I have yet to find one that was great. Most require a dozen scans that get merged, point clouds lined up, and maybe the resolution is passable. Even if it lists a .02mm precision. Further, many of the tools actually use the same software, which kinda’ sucks and crashes more than it should (which is at all tbh). As you can see in the below image, the results are often lackluster.

I had actually all but given up on scanning real life objects.

Part 5: Apple Enters The Fray

Well, at least, that’s where I was until Apple introduced Reality Composer for iPhone 15 Pro a few weeks ago. Apple has, yet again, restored my faith in technology. Before we talk about Reality Composer, let’s talk about Reality Converter. This was the first place that my experiences from all these years really started to click and I realized that when it comes to augmented reality, the 3d printing community had actually been augmenting more than the gaming community, where models stayed in a virtual reality environment - but the power of a device like a Vision Pro is way, way more than virtual reality. Heck, even Jaron Lanier, who wrote the Virtual Reality Language (VRL) in the 1980s, talked about virtual reality like an escapist drug, rather than a way to make lives better (he was, in fact, concerned it would be outlawed like 'shrooms recently were when he did his groundbreaking VR work). Many in the 3d printing world just print little fidgets, or miniatures for tabletop gaming, but they can do so much when it comes to augmenting our physical reality - not just augmenting a veneer over the top of our physical reality.

One of the things that I’d built was a collection of ancient coins. This was to 3d print and then photograph images for a book I was writing. Those are posted as .stl files on Thingiverse (https://www.thingiverse.com/krypted/collections/37539515/things). Turns out, this Reality Converter could convert the OBJ files, which is another file format option available for printing. I wanted to convert lots, so I built a little script to help automate that process using Apple’s RealityKit, which is a pretty rad and well thought out body of work on Apple’s part. That’s available at https://github.com/krypted/3dconverter.

Reality Converter is currently in beta and available for download at https://developer.apple.com/augmented-reality/tools/. It looks simple enough once installed and opened.

The cool thing about Reality Converter is that it’s more than just a conversion tool. In the following example, we import a tile of swamp terrain that’s in a .obj file.

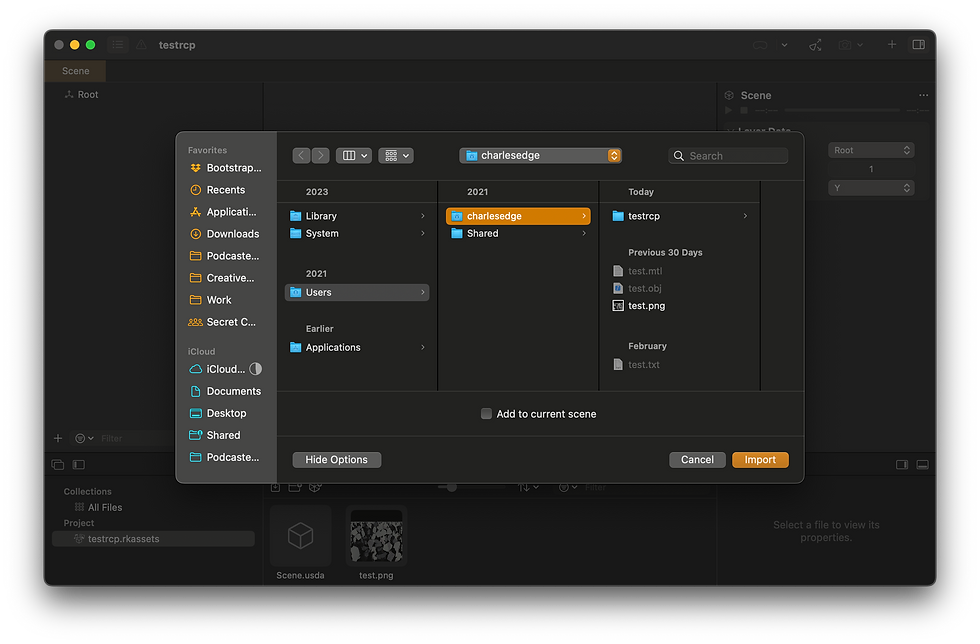

It’s also possible to apply new materials for each aspect of the image, as seen in Base Color, Emissive, Metallic, Roughness, Normal, Occlusion, Opacity, Clearcoat, and Clearcoat Rough. But as I later learned, once using Reality Composer Pro, this isn’t necessary as those can be dynamically applied in a scene. More on that later. To import files, use the import option, but keep in mind (as seen in the below screenshot) that these need to be jpegs. More on at least one way to get the texture map later in this article.

Now there’s an object that’s been 3d printed, but also exists in this AR space. Or at least, can be once an app is built to wrap around that. Select File-> Export, and there’s an option to dump the usdz (more on usdz in a bit). This is great if you don’t still have the original project an object was created with. But, there’s actually a better way.

Blender, and plenty of other tools used to generate 3d graphics, natively support universal scenes in the form of a USD archive (where USDZ is Apple’s version of an archive). So here’s a Grace Hopper I made once upon a time: https://www.thingiverse.com/thing:5399776/files - download that, open it in Blender, and use the File menu to Export. There, use usd*.

The resultant objects exported include the .usd as well as a pack of texture files.

Now, we’ve taken a virtual object that never existed in the reals and brought it into a format that can be imported into an AR environment. Let’s open Xcode and use Reality Composer Pro (available under the Xcode menu and then Open Developer Tool, which btw should be plural imho, and then Reality Composer Pro). From Reality Composer Pro, drag the objects into the scene.

It’s possible not only to apply the same types of materials, but there’s a symbolic view. This is useful when working with the APIs in SwiftUI later. For example, change the texture on a die. Once created, you’ll see the asset in the sidebar of Xcode, like with this standard six-sided die.

Don’t have Xcode? It’s also possible to use Reality Composer (not Pro) with a quick download off the App Store at

https://apps.apple.com/us/app/reality-composer/id1462358802. This is actually a much simpler tool, with a visual and user-friendly interface that can easily be taught to non-developers. It allows users to directly drag and drop virtual objects and manipulate them within the AR scene. Reality Composer also has a library of pre-built 3D objects, materials, and animations that users can readily incorporate into their AR scenes. Users can also import their own 3D models created in external software (supporting formats like USDZ and OBJ). Objects within the scene can be assigned various behaviors using a visual scripting system. This allows users to define how objects interact with the real world, respond to user input (taps, gestures), and trigger animations or sound effects.

Reality Composer, Reality Composer Pro, and Reality Converter (and my dinky script) leverage Apple's AR framework, RealityKit, to render the 3D objects and animations within the AR experience. This ensures smooth integration and compatibility with Apple devices and stores all these objects into a nice little packed bundle. Basic physics simulations can be implemented to add a layer of realism to the AR experience. Once the AR experience is designed, Reality Composer allows users to export it as a Reality File (.reality) for further development in Xcode. It can also be published as an AR Quick Look experience, shareable with others in Safari, Messages, or Mail. AR Quick Look experiences don't require a separate app and can be viewed on compatible Apple devices (and we've long been able to use Xcode and Quick Look to view STL files).

Think of Reality Composer as a visual programming tool specifically designed for building AR experiences (when not shrooming, or maybe when shrooming, as Jaron Lanier did something similar with his VRL in the 80s). For highly interactive and custom-tailored AR applications, developers will use Xcode and RealityKit directly for more advanced programming on the specific objects - for example, dynamically swapping out elements and materials. Apple gives a good primer for getting started with it at https://developer.apple.com/documentation/visionos/designing-realitykit-content-with-reality-composer-pro#Build-materials-in-Shader-Graph

As mentioned, the USD format (and variants of packed objects) is the standard that makes so much of this stuff interoperable. We can thank Pixar for that, since they open sourced it in 2016 (it now goes by OpenUSD). If it’s great for all those cool Pixar and Disney Animation movies then it’s great for us. The USDZ and USDA formats are related but distinct ways to store 3D information in ways that Xcode can easily interpret (er, import objects from), given a standard, structured representation of a number of different data objects (the text form can look a little like json, but it has its own syntax).

USDZ (Universal Scene Description Zip) is a packaged format: an uncompressed zip archive. Inside, it contains a main USD file (usually USDC) and any textures or other resources the 3D model needs. USDZ files work well with Apple's AR features like AR Quick Look and RealityKit. They can include extra information beyond the 3D model itself, such as how to anchor the object in the real world or how it should react to physics.

There are a few other formats, as well. USDA (USD ASCII) is a text-based version of the USD format. It's human-readable, but not really meant to be edited directly (it can get complex). Developers can use USDA files for debugging or as an intermediate step when processing USDZ files. USDC is the binary version of USD, and is typically the main USD file found within a USDZ package. The "C" stands for "crate," the name of Pixar's binary file format (it's often mis-expanded as "compressed"). Compared to the text-based USDA format, USDC files are much smaller and load significantly faster. This is essential for smooth performance in AR applications where quick rendering is necessary. The USDC file within a USDZ package holds the core 3D scene description, including the geometry, materials, and other properties of the 3D object. USDC files can also be converted back to the readable USDA format using tools such as usdcat from the OpenUSD toolset.
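To make the differences between the three a bit more concrete, here's a minimal sketch that guesses which flavor a file is by peeking at its first few bytes. The USDFlavor enum and sniffUSDFlavor function are names I made up for illustration (they're not part of any Apple or Pixar API), and it assumes text layers open with "#usda", crate files open with "PXR-USDC", and a USDZ opens with the usual zip signature.

```swift
import Foundation

// Hypothetical names for illustration; not part of RealityKit or OpenUSD.
enum USDFlavor: String {
    case usda, usdc, usdz, unknown
}

/// Guesses the flavor of a USD file from its leading bytes.
/// Any zip archive will be reported as .usdz, so treat that result as
/// "zip container, probably USDZ" rather than a guarantee.
func sniffUSDFlavor(_ data: Data) -> USDFlavor {
    let usdaMagic = Data("#usda".utf8)              // text layers begin with "#usda 1.0"
    let usdcMagic = Data("PXR-USDC".utf8)           // binary crate layers
    let zipMagic = Data([0x50, 0x4B, 0x03, 0x04])   // "PK" + 0x03 0x04, a zip local file header

    if data.starts(with: usdaMagic) { return .usda }
    if data.starts(with: usdcMagic) { return .usdc }
    if data.starts(with: zipMagic) { return .usdz }
    return .unknown
}

// Example: a tiny hand-written text layer.
let sample = Data("#usda 1.0\ndef Xform \"Coin\" {}\n".utf8)
print(sniffUSDFlavor(sample)) // usda
```

In a real project the bytes would come from something like Data(contentsOf:) on a file URL. A check like this is only a quick sanity test before handing a file to a proper USD reader.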

Reality Composer on iPhone is a special kind of app. One of my favorites, given that I’ve been trying to do what it does for years. The iPhone 15 Pro out-performs far more expensive devices at capturing a physical object. Take this USDZ of a giant I printed from an STL and then painted. This is by far the best 3d scan I’ve ever seen. Using https://products.aspose.app/3d/conversion/usdz-to-obj it’s then possible to see how the elements are extracted in a conversion to stl and to obj.

It’s also possible to see the raw stl or obj with no color in Quick Look, as well as the texture file, which gets overlaid. Again, the texture comes from the camera, and the reason the iPhone 15 Pro is used rather than just an iPhone is that you can think of the LiDAR camera as producing the actual STL (to oversimplify an analogy).

Part 6: In Code

Now that there are these obj and usdz files, it’s time to put them into code. It would be easy to go down a rabbit hole and write a whole book about Swift (which I did and it’s currently being edited by my publisher and will hopefully be out soon). But it’s also possible to just focus on the elements necessary to manipulate these 3d objects in an Augmented Reality view.

Let’s take the coins mentioned earlier and display a grid of them that just allows a user to spin them and look at them. First we’ll import and then set up the objects:

import SwiftUI

// The categories of 3D content the app can show.
enum AppData: CaseIterable {
    case coins, dices

    // Human-readable title for each category.
    var title: String {
        switch self {
        case .coins:
            return "Coins"
        case .dices:
            return "Dice"
        }
    }

    // The items in each category. The image is the thumbnail asset, and
    // immersiveViewType is presumably the name of the scene to load.
    var arrList: [ListType] {
        switch self {
        case .coins:
            return [ListType(id: "immersiveView", image: "app.gold", immersiveViewType: "tigerCoin"),
                    ListType(id: "immersiveView", image: "Charlemagne", immersiveViewType: "Charlemagne"),
                    ListType(id: "immersiveView", image: "caesar sm ", immersiveViewType: "Caesar"),
                    ListType(id: "immersiveView", image: "byzantine", immersiveViewType: "Byzantine"),
                    ListType(id: "immersiveView", image: "Chinesecoins", immersiveViewType: "Chinesecoins"),
                    ListType(id: "immersiveView", image: "colosseo", immersiveViewType: "colosseo"),
                    ListType(id: "immersiveView", image: "Daric", immersiveViewType: "Daric")]
        case .dices:
            return [ListType(id: "immersiveViewDice", image: "Dice", immersiveViewType: "Dice")]
        }
    }
}

// One selectable item in a list.
struct ListType: Identifiable, Hashable {
    var id: String
    var image: String
    var immersiveViewType: String
}

Then it’s possible to lay the coins out in SwiftUI, so a user can select one of them to flip:

import SwiftUI
import RealityKit
import RealityKitContent

struct CoinListView: View {
    // Current spin of the model around the y axis, in degrees.
    @State private var angle: CGFloat = 0

    var body: some View {
        TabView {
            // Twenty pages, each showing the same "Toss" model.
            ForEach(0...19, id: \.self) { _ in
                VStack {
                    Model3D(named: "Toss", bundle: realityKitContentBundle)
                        .rotation3DEffect(.degrees(-90), axis: .x)   // stand the coin upright
                        .scaleEffect(x: 0.5, y: 0.5, z: 0.5)
                        .rotation3DEffect(.degrees(angle), axis: .y) // the animated spin
                        .frame(width: 250)
                        .onAppear {
                            angle = 0
                            // Every 3 seconds, nudge the angle inside a repeating linear animation.
                            let timer = Timer.scheduledTimer(withTimeInterval: 3, repeats: true) { timer in
                                withAnimation(.linear(duration: 3).repeatForever(autoreverses: false)) {
                                    angle += 270
                                }
                            }
                            // Fire once right away so the spin starts immediately.
                            timer.fire()
                        }
                        .padding(.bottom, 150)
                    Text("Toss")
                        .font(.system(size: 30, weight: .bold))
                }
                .frame(width: 350, height: 500)
            }
        }
        .tabViewStyle(.page(indexDisplayMode: .never))
    }
}

#Preview {
    CoinListView()
}

Then, start flipping and rolling dice, using gestures to pick them up, adding in some randomization to make things feel a bit more… realistic for lack of a better word:

import SwiftUI
import RealityKit
import RealityKitContent

struct ImmersiveView: View {
    @Environment(\.dismissWindow) var dismissWindow
    @State private var rotation: Angle = .zero
    @State private var offSet: CGFloat = -100
    @State private var isCoinFlipping = false
    @State private var perFlip: Double = 0.1

    var body: some View {
        RealityView { content in
            // UserDefaults.immersiveView is a custom extension (not shown here)
            // that returns the name of the scene to load.
            if let scene = try? await Entity(named: UserDefaults.immersiveView, in: realityKitContentBundle) {
                content.add(scene)
            }
        }
        .gesture(TapGesture(count: 1).targetedToAnyEntity().onEnded({ value in
            // Randomly pick an even or odd number of flips.
            let totalFlip: Double = [40, 41].randomElement() ?? 39
            perFlip = 0.1
            let degreeRotationPerFlip: Double = -540 //-180 is laggy in simulator
            var didflipCount: Int = 0
            let travelPerFlip: Double = 200
            let willTotalTravel: Double = travelPerFlip * totalFlip
            var didTotalTravel: Double = 0.0
            let halfWay = willTotalTravel / 2

            // Reset to the starting pose before each toss.
            rotation.degrees = 0.0
            offSet = -100
            isCoinFlipping = true

            let timer = Timer.scheduledTimer(withTimeInterval: perFlip, repeats: true) { timer in
                withAnimation(.smooth(duration: (perFlip))) {
                    didflipCount = didflipCount + 1
                    didTotalTravel = didTotalTravel + travelPerFlip
                    if didTotalTravel <= halfWay {
                        // First half: rise. Odd flip counts get a little extra per
                        // step so the coin still lands where it started.
                        rotation.degrees -= -degreeRotationPerFlip
                        offSet -= (((travelPerFlip) + (Int(totalFlip) % 2 == 0 ? 0 : (travelPerFlip / ((totalFlip - 1) / 2)))))
                    } else {
                        // Second half: fall.
                        rotation.degrees -= -degreeRotationPerFlip
                        offSet += (travelPerFlip)
                    }
                }
                // Stop once the coin has turned through every flip.
                if rotation.degrees <= Double(degreeRotationPerFlip * totalFlip) {
                    timer.invalidate()
                    print(didflipCount)
                    isCoinFlipping = false
                }
            }
            timer.fire()
        }))
        .rotation3DEffect(rotation, axis: .z, anchor: .center)
        .offset(y: offSet)
        .disabled(isCoinFlipping) // ignore taps while a toss is in progress
        .onAppear {
            dismissWindow(id: "main")
        }
    }
}

#Preview {
    ImmersiveView()
        .previewLayout(.sizeThatFits)
}

It’s worth mentioning that these objects rise and fall in real, physical space. The views are layered on top of the display.
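The arithmetic inside that timer is easier to reason about without any UI around it, so here's a rough sketch that replays the same steps as a plain function. FlipFrame and simulateFlip are hypothetical names for illustration (they're not in the app), and the defaults just mirror the constants used in the view. It assumes one step per flip, with the coin rising for the first half of the travel and falling for the rest.

```swift
import Foundation

// Hypothetical types for illustration; they mirror the timer logic in the view.
struct FlipFrame {
    let rotationDegrees: Double
    let offset: Double
}

/// Replays the toss one step at a time and returns every intermediate pose.
func simulateFlip(totalFlips: Int,
                  degreesPerFlip: Double = -540,
                  travelPerFlip: Double = 200,
                  startOffset: Double = -100) -> [FlipFrame] {
    guard totalFlips > 0 else { return [] }

    let halfWay = travelPerFlip * Double(totalFlips) / 2
    // An odd count has one fewer rising step than falling steps, so each
    // rising step gets a little extra travel to bring the coin back to its start.
    let risingSteps = totalFlips / 2
    let isOdd = totalFlips % 2 != 0
    let extraRise = (isOdd && risingSteps > 0) ? travelPerFlip / Double(risingSteps) : 0

    var frames: [FlipFrame] = []
    var rotation = 0.0
    var offset = startOffset
    var travelled = 0.0

    for _ in 0..<totalFlips {
        travelled += travelPerFlip
        rotation += degreesPerFlip
        if travelled <= halfWay {
            offset -= travelPerFlip + extraRise // a negative y offset moves the view up
        } else {
            offset += travelPerFlip
        }
        frames.append(FlipFrame(rotationDegrees: rotation, offset: offset))
    }
    return frames
}

let toss = simulateFlip(totalFlips: 41)
print(toss.last!.offset) // -100.0
```

Because each step turns the coin a turn and a half, an even count ends on a whole number of turns and an odd count ends half a turn off, which is presumably why the view picks randomly between 40 and 41.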

Now, let’s take some of these concepts and look at doing even more (thanks to the vooooondervul Joel Rennich for his amazing work in this), and look at what’s then possible, like a virtual tabletop for gaming!

Meanwhile, and no shade intended here, there are a lot of apps available for Vision Pro that are just a re-compiled version of an iPad app. Here, there’s a 2d app for looking at furniture.

But it could be sooooo cool (and what I wanted) if it was a real 3d app with some of this code to build better, immersive views, rather than layering objects on 2d maps! In other words, rather than look like King’s Quest, let’s get real physics involved, without having to understand the mathematical concepts behind Hilbert spaces (although having a brain like his or Von Neumann’s never hurts).

One final note is that while assets within apps are true 3d objects, metadata assets like AppIcons use technology similar to what King's Quest used all those years ago, where you upload a front, middle, and back image, and it parallaxes between them as objects are scrolled through. Cool, but an illusion of sorts - and given that we don't want to slow down the home screen, a really solid tradeoff for potentially expensive processes and a look that is native to the device but familiar enough for developers that they can often just recompile their iPad app, duplicate the files in the iconset and plop them in there.

We now have the ability to print, paint, scan, and then print again (maybe even in color since the Bambu can do that). We can create net-new objects and print them. AI can even help with that. There are lots of junky tools out there. But by not building yet another, Apple has paved the way for real developers to build real tools to help people do more, and in an immersive experience that still includes the real world - which means devices like the Vision Pro can be as much for productivity as for fun. Imagine capturing a spatial video (which seems beyond the scope of this little article) of a crime scene, having an overlay for an airplane engine to help with diagnostics (rather than the cute minis we put on a table, print or CNC the defective part), or being able to truly see what that chair in that app will look like in the living room before buying it - in real 3d. Maybe the "singularity" isn't Skynet (that whole dystopian view of AI came from, and is usually symbolic of, nuclear war). Maybe the "singularity" is the blending of physical and virtual realities so we humans can get more done. Because computing devices have always been about productivity. Now it's more real than ever.

We are working on making the SwiftUI more responsive, and a better experience in Secret Chest. We probably won't build any fancy scenes or animations. They'd just get in the way of being productive, and that's not the kinda' app we build. Having said that, we had a blast doing a little R&D around how to improve experiences! If you have a Vision Pro and want to take Secret Chest for a spin (or if you don't), sign up for our private beta on the main page! Or check out the troubleshooting guide we did for Vision Pro, the article we did on Vision Pro as a Symbiosis device, or this one on more about VR and AR history and what we're thinking about for Secret Chest.
