Apple's Vision Pro is the latest in a long, long line of products that make new technology usable for the masses. It’s in Apple’s DNA - from graphical interfaces to the mouse to the multimedia revolution to mobile computing that didn’t suck. Vision Pro opens doors to a world of immersive “spatial computing” experiences that just feel modern. And yet spatial computing, like much of modern technology, has its origins in Cold War deep research. And as with a lot of the technology we use today, the earliest ideas emerged from science fiction.

In 1935, Stanley Weinbaum's "Pygmalion's Spectacles" laid the groundwork for the concept of virtual reality we know today. The story follows Dan Burke, who encounters Professor Albert Ludwig, a peculiar inventor with a pair of revolutionary spectacles. These glasses transport Dan not just visually, but fully, into a fantastical virtual world crafted by Ludwig. While exploring this immersive realm, Dan meets and falls in love with a woman named Galatea, leading him to question the very nature of reality. Notably, the story presciently describes features essential to modern VR, including sensory stimulation, interactive environments, and user-centric narratives. Though written decades before technology caught up, "Pygmalion's Spectacles" remains a captivating and innovative vision that continues to inspire developers and dreamers alike.

About two decades later, in the late 1950s, Morton Heilig dared to dream beyond cinema with his groundbreaking invention, the Sensorama. This wasn't just a movie screen; it was a portal to another reality. Imagine being nestled in a multi-sensory theater, enveloped by a 3D film projected onto a curved screen (curved like monitors in the 2010s). A motorcycle zips down a bustling street, wind shoots through hair, the rumble of the engine vibrates seats, and the scent of hot asphalt fills nostrils. This was the magic of the Sensorama, a pioneer in what might be called proto-Virtual Reality today, that employed film, smells, wind, and vibration to create an immersive experience far ahead of its time. Though limited in scope and complexity, the Sensorama stands as a testament to Heilig's visionary spirit and a crucial stepping stone on the path toward the multi-sensory virtual worlds we explore today. The technology needed to be more personal, though - immersive for each individual.

The first headset for immersive technologies was the Sword of Damocles, a project by the great graphical interface pioneer Ivan Sutherland and his student Bob Sproull, who would later become a researcher at Xerox PARC and lead Sun’s R&D arm before Sun was acquired and the lab was rebranded as Oracle Labs. Their work ran at about the same time as other projects that were classified. Sutherland, already a pioneer in computer graphics with the first true GUI, Sketchpad, envisioned an "ultimate display" that would immerse users in computer-generated environments. Inspired by the mythical sword hanging threateningly above Damocles' head, he constructed a bulky apparatus with three main parts: a stereoscopic display that provided a 3D view of the virtual world; mechanical and ultrasonic position sensors that tracked the user's head movements so the displayed image could be updated accordingly; and a computer that generated the visuals, housed in a separate room due to its size and processing power limitations.

The entire contraption, suspended from a frame hanging from the ceiling, earned its name due to its intimidating presence. Despite its limitations, the Sword of Damocles marked several firsts:

First head-mounted VR display: It allowed users to move their heads and experience a dynamic virtual environment.

First head tracking in VR: The system tracked head movements and adjusted the visuals accordingly, creating a basic sense of presence.

Early exploration of 3D interaction: Users could interact with simple wireframe objects using a light pen, marking an early step towards VR user interfaces.

While cumbersome and impractical for widespread adoption, the Sword of Damocles laid the groundwork for future Virtual Reality advancements and inspired researchers who paved the way for more portable and user-friendly head-mounted displays that would eventually lead to the VR we know today. Wait, we’re getting ahead of ourselves. Apple markets this technology as spatial computing or augmented reality, while much of the rest of the industry refers to it as virtual reality. The terms virtual reality (VR), augmented reality (AR), and spatial computing are often used interchangeably, but they have distinct features:

Virtual Reality (VR):

Completely immerses users in a computer-generated environment: Imagine wearing a headset that replaces your entire field of view with a digital world. You can move around within this world, interact with objects, and feel a sense of "being there." For example, when strapping on a Vuzix or Quest, users typically have the rest of their surroundings blacked out. Don’t try to walk around or you might get hurt.

Focuses on creating a convincing illusion of reality: VR uses high-resolution displays, 3D graphics, sound effects, and sometimes even haptics (touch feedback) to trick your senses into believing you're in a different place.

Examples: Gaming headsets, VR training simulations, virtual tours (e.g. of homes, sometimes captured with 3d cameras or drones).

Augmented Reality (AR):

Overlays digital information onto the real world: Think about seeing virtual objects, text, or instructions superimposed on your physical surroundings through a smart device or headset.

Enhances the real world with digital elements: AR doesn't replace your view entirely, but adds another layer of information. This information can be practical, like navigation instructions, or more playful, like adding virtual characters to your living room.

Examples: Pokémon Go, smart glasses for maintenance workers, AR filters on social media. This is one aspect of the Apple Vision Pro - you can still see your surroundings, which opens up a world of uses, from using CoreML to analyze models of how a part should move (or sound) and getting guided repair suggestions, to grabbing magic balls to the beat of some soundtrack in a game.

Spatial Computing:

Broader term encompassing VR, AR, and other related technologies: It refers to how computers interact with and understand the real world in three dimensions. Where cybernetics was the study of feedback loops, spatial computing puts us into the loop virtually.

Focuses on the interaction between the physical and digital world: Spatial computing encompasses technologies that track spatial information, map real-world environments, and allow users to interact with digital objects in these spaces. In other words, think of tracking the hands to guide a repair process, or using a gesture to invoke a change in another physical place, possibly carried out by a robot (technology initially built to try and put nuclear reactors on bombers).

Examples: Gesture-based controls in VR, mixed reality experiences that blend real and virtual elements, holographic displays.

In short:

VR: Creates a new, virtual world.

AR: Adds digital elements to the real world.

Spatial Computing: Encompasses technologies that understand and interact with the real world in 3D.

Think of it as a spectrum: VR is on one end, completely replacing reality, while AR is on the other, simply adding, or augmenting, information. Spatial computing covers the ground between them, encompassing technologies that interact with the real world in various ways. Publicly available augmented reality research started in the 1960s and 1970s, at the dawn of (and inspiring) object-oriented programming, with Ivan Sutherland's "Ultimate Display" concept and Alan Kay's pioneering work on wearable computing (and the work of many others, like the team at Digital’s R&D lab that gave us the first wearable MP3 player).

The concept of a virtual space emerged because fourth generation fighter jets had become too unwieldy for pilots. That led the US Air Force to invest in new cybernetic approaches to flying: put helmets on humans that could aid them in shooting down enemy aircraft while sitting in the cockpit of a plane that had 500 computers. Each of those computers had its own output, and pilots simply couldn’t maneuver and track everything they needed to track. Enter Thomas Furness, now known as the "grandfather of virtual reality" to those who know.

His tenure at the U.S. Air Force in the 1960s and 70s remained classified for decades, shrouding his significant contributions to the early development of VR technology. Fresh out of Duke University with an electrical engineering degree in 1966, Furness joined the Air Force Systems Command at Wright-Patterson Air Force Base. His mission: develop the super cockpit of the future. This helmet-mounted display system provided fighter pilots with a 3D representation of their surroundings, including critical flight information and potential threats. It featured new tracking systems and early forms of voice and hand gesture control, which helped to revolutionize the situational awareness and training for pilots.

He later moved to the private sector, where he explored head-mounted displays for maintenance and repair, virtual simulations for medical training, and even early forms of spatial augmented reality. Much of this work remains shrouded in secrecy, but its impact on the foundation of VR technology is undeniable. While classified at the time, Furness' Air Force research laid the groundwork for future advancements in VR. His innovations paved the way for commercial applications and sparked the public's imagination about the potential of VR technology, in part because Dan Rather ran a story about a head-mounted display that helped pilots right around the time that Star Wars came out. Turns out the display looked a bit more like a Darth Vader helmet than the pedestrian computer Luke used to blow up the Death Star, and many fighter pilots, initially reluctant to embrace the new technology, suddenly became staunch advocates.

After leaving the Air Force in 1989, Furness continued his VR journey at the University of Washington, establishing the Human Interface Technology Lab (HITLab). By then, there were data gloves (like the Nintendo Power Glove, also released in 1989), and shortly thereafter, as with all new technology innovations, a horror movie about VR came out, called The Lawnmower Man. Vuzix was founded in 1997 to produce head-mounted displays, although those early attempts were basically 640x480 displays in goggles. A few of the stops on the path to where we are today included:

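1982: Thomas Zimmerman files a patent for a flex-sensing glove, the design VPL Research would later sell as the DataGlove, which enables hand interaction in VR environments.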

1985: Jaron Lanier founds VPL Research, a key player in early VR development.

1991: Virtuality, the first VR arcade machine, offers public VR experiences.

1994: Sega VR-1 brings motion simulation to arcades.

Then VR seemed to hit a plateau from the 2000s into the early 2010s. More money was getting pumped into the internet, with technologies like Google Street View in 2007 offering panoramic views of real-world locations, paving the way for AR integration. Data brought to devices by the ever more pervasive Internet combined with smaller and faster chips as Moore’s Law continued to play out. By 2010, the Oculus Rift prototype emerged, sparking renewed interest in consumer VR. Google announced Google Glass in 2012, an early AR smartglass, to mixed reception. But VR became big business in 2014, when Facebook acquired Oculus for $2 billion, signaling mainstream investment in VR.

A VR/AR renaissance then began to unfold from the mid-2010s to the present. Google responded to the Oculus acquisition with Google Cardboard in 2014, a low-cost VR platform for smartphones. Microsoft unveiled the HoloLens in 2015, a mixed reality (MR) headset blending VR and AR. The Oculus Rift and HTC Vive, high-end PC VR headsets, launched to critical acclaim in 2016. Over the next few years, headsets became increasingly accessible on systems like the PlayStation VR. Meta even launched the Quest Pro, a high-end VR headset focused on work and collaboration, in 2022. Most CTOs still think of them as toys, but the technology is ready for real work, as tablet computing was when Apple released the iPad.

Apple had seemingly been somewhat quiet on the VR front. They released ARKit in 2017, so developers could create AR experiences on iPhones and iPads. It seemed like technology specifically designed to create experiences like Pokémon Go, not something meant for work. Yet throughout the 2020s, advancements in haptics, eye-tracking, and spatial mapping enhanced realism and interaction in VR/AR. When the Vision Pro started to ship earlier this month, it included several technologies that are underrated. For example, Optic ID.

Optic ID is a revolutionary iris recognition system built into Apple's Vision Pro. It replaces traditional passwords and fingerprint scans with the unique patterns of your iris, offering a secure and convenient way to unlock your device, authorize purchases, and sign in to apps. Optic ID works even in low light, and your iris data never leaves your device, so your biometric data remains private. Imagine simply looking through your Vision Pro and seamlessly gaining access - that's the magic of Optic ID, bringing futuristic authentication to your fingertips (or rather, eyes). What’s great is that for a developer, Optic ID is yet another incarnation of the same APIs we use to grab keys out of the Secure Enclave, so many apps will require little (if any) work to get them working on the new platform. And yes, that means there’s a Secure Enclave on the device. And a PIN to bypass Optic ID, as there’s a PIN or passcode or password to bypass all of Apple’s biometric authentication mechanisms. The biometric is just a shortcut to a key.
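To make "the biometric is just a shortcut to a key" a little more concrete, here is a minimal sketch using the LocalAuthentication and Security frameworks. It is not the Secret Chest code. The function names and the key tag are made up for illustration, and it assumes a deployment target where the `.opticID` case exists (visionOS 1.0, iOS 17, or macOS 14 and later).

```swift
import Foundation
import LocalAuthentication
import Security

/// Hypothetical helper: describes which biometric this device offers.
func biometryDescription() -> String {
    let context = LAContext()
    var error: NSError?
    // biometryType is only populated after canEvaluatePolicy has run.
    guard context.canEvaluatePolicy(.deviceOwnerAuthenticationWithBiometrics,
                                    error: &error) else {
        return "Biometrics unavailable: \(error?.localizedDescription ?? "unknown reason")"
    }
    switch context.biometryType {
    case .opticID: return "Optic ID"
    case .faceID:  return "Face ID"
    case .touchID: return "Touch ID"
    case .none:    return "None"
    @unknown default: return "Unknown biometric"
    }
}

/// Hypothetical helper: creates a P-256 private key inside the Secure Enclave.
/// `.userPresence` accepts the biometric OR the device passcode, which is
/// the "PIN to bypass" behavior described above.
func makeEnclaveKey(tag: String) throws -> SecKey {
    var error: Unmanaged<CFError>?
    guard let access = SecAccessControlCreateWithFlags(
        kCFAllocatorDefault,
        kSecAttrAccessibleWhenUnlockedThisDeviceOnly,
        [.privateKeyUsage, .userPresence],
        &error
    ) else {
        throw error!.takeRetainedValue() as Error
    }

    let attributes: [String: Any] = [
        kSecAttrKeyType as String: kSecAttrKeyTypeECSECPrimeRandom,
        kSecAttrKeySizeInBits as String: 256,
        kSecAttrTokenID as String: kSecAttrTokenIDSecureEnclave,
        kSecPrivateKeyAttrs as String: [
            kSecAttrIsPermanent as String: true,
            kSecAttrApplicationTag as String: Data(tag.utf8), // illustrative tag
            kSecAttrAccessControl as String: access
        ] as [String: Any]
    ]

    guard let key = SecKeyCreateRandomKey(attributes as CFDictionary, &error) else {
        throw error!.takeRetainedValue() as Error
    }
    return key
}
```

Nothing in `makeEnclaveKey` mentions Optic ID by name, which is the point: the same call should behave the same way on a Touch ID Mac or a Face ID iPhone, with the system presenting whichever biometric the hardware has.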

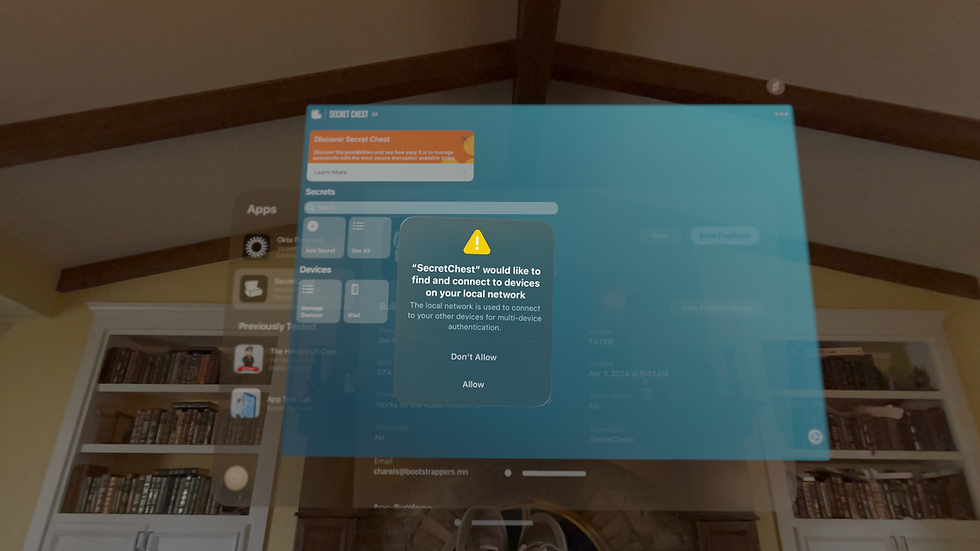

We were able to get Vision Pro as a “target” for Secret Chest to compile to, get access to the Optic ID-backed Secure Enclave (or to be pedantic, access to the APIs to interact with the Secure Enclave), and render information to the screen from TestFlight within a couple of hours of programming. That doesn’t include any sweet new layouts that take advantage of spatial computing or Apple’s new pinchy-types of interactions/gestures. But a little tinkerating with those told us we didn’t actually need to do much - yet. There were bugs, where we didn’t make our SwiftUI declarative enough here and there, but in short, when we used modern coding techniques, a lot of things just worked. The rest just surfaced some tech debt we needed to address sooner rather than later, like this screen where the autofill magic, well, didn't happen at first! Happens, even with pre-release software. Easy enough to fix...
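When an existing codebase picks up a new target like this, the few spots that really do need to differ can be fenced off with conditional compilation. A minimal sketch follows; `platformLabel` is a hypothetical helper, not something from Secret Chest, and it assumes a toolchain recent enough to know the `visionOS` platform name (Swift 5.9 or later).

```swift
/// Hypothetical helper: returns a label for the platform this build targets.
/// Only one branch is compiled into any given build.
func platformLabel() -> String {
    #if os(visionOS)
    return "visionOS"
    #elseif os(iOS)
    return "iOS"
    #elseif os(macOS)
    return "macOS"
    #else
    return "other"
    #endif
}

print("Built for \(platformLabel())")
```

The fewer of these branches an app needs, the better. In our experience the declarative SwiftUI parts mostly carried over as-is, so checks like this are best kept for things that genuinely only exist on one platform.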

So now let’s talk about how other developers can jump on the Vision Pro train to build the next generation of apps and games. First, the development environment. That starts with Xcode, so download the latest beta version of Xcode with visionOS support. This integrated development environment (IDE) provides everything necessary to write code, build, compile, distribute, and test Vision Pro apps (or to add Vision Pro support to an existing app). An Apple Developer account will also be necessary to access resources, documentation, and the ability to distribute creations through TestFlight or on the App Store. That also gives access to entitlements according to the type of app and tools like Reality Composer for capturing USDZ files (more on that in a bit). Finally, a little hardware goes a long way. While the Vision Pro headset isn't yet publicly available everywhere, developers can start developing using the simulator within Xcode.

Next, get comfortable with the core development concepts for Vision Pro. This goes beyond learning SwiftUI and the standard frameworks and language features, like async/await, that many have used for years. These include:

RealityKit: This framework forms the foundation for building 3D scenes and interacting with the spatial environment. Learn its key components like anchors, entities, and materials.

PolySpatial: This is Unity's technology for bringing Unity content to visionOS, where it is rendered through RealityKit so it can sit alongside other apps in the user's space. Understand how to integrate it if your app or game is built in Unity.

World Tracking: Vision Pro apps leverage real-time world tracking to accurately position virtual content in the user's physical space. Grasp the basics of world mapping and spatial understanding.
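To make the RealityKit vocabulary above a bit more tangible, here is a minimal sketch with one entity, one material, and one anchor. It assumes a visionOS target and a view shown inside an immersive space, since plane anchors generally won't resolve in a plain window as far as we can tell. `TabletopSphereView` is a made-up name, and the sizes are arbitrary.

```swift
import SwiftUI
import RealityKit

/// Hypothetical view: places a small blue sphere on the first table
/// surface the system detects.
struct TabletopSphereView: View {
    var body: some View {
        RealityView { content in
            // Entity + material: a 10 cm radius sphere with a simple,
            // non-metallic blue surface.
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: 0.1),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // Lift the sphere by its radius so it rests on the surface
            // instead of being cut in half by it.
            sphere.position = [0, 0.1, 0]

            // Anchor: a horizontal plane classified as a table,
            // at least 20 cm by 20 cm.
            let tableAnchor = AnchorEntity(
                .plane(.horizontal, classification: .table, minimumBounds: [0.2, 0.2])
            )
            tableAnchor.addChild(sphere)
            content.add(tableAnchor)
        }
    }
}
```

The anchor is what ties virtual content to the physical room. The sphere's position is expressed relative to the anchor, so the system is free to refine where the table actually is without the app recomputing anything.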

Apple offers pretty great tutorials and resources to get started, with the best entry point probably being visionOS Learn (https://developer.apple.com/visionos/learn/). This official website provides in-depth guides on various aspects of Vision Pro development, including RealityKit, PolySpatial, and world tracking. There are already plenty of posts on the Apple Developer Forums as well, where developers can engage in discussions, ask questions, and learn from other developers (including those who write the APIs at Apple). They also have some awesome examples, like how to see if someone is making a heart with their hands. <3

Next, start with a simple project: a basic app that demonstrates core functionality like displaying 3D objects or interacting with the real world. Let’s begin with those 3D objects that can be overlaid onto the real world. These are virtual objects that could seemingly be held in a hand or walked into. For those who have witnessed captivating augmented reality experiences where digital elements seamlessly blend with the real world, these are the AR equivalent of sprites or worlds. It all begins with USDZ.

USDZ stands for Universal Scene Description Zip. It's a file format that packages a 3D scene, complete with models, textures, materials, and even animations, into a single, portable file. The format is based on USD, the Universal Scene Description standard that originated at Pixar, which offers a flexible and interoperable way to represent 3D scenes. It’s basically a bundle: a ZIP archive whose contents are stored without compression, so renderers can read assets straight out of the file without unpacking it first. That single-file packaging makes USDZ files easily shareable and downloadable. And on Apple devices, USDZ files require no additional software or apps to be viewed, since Quick Look opens them out of the box.
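Since a USDZ file is a ZIP archive under the hood, a few lines of Foundation-only Swift can peek at the first entry. This is a rough sketch rather than a real parser: it reads only the first local file header, and `FirstZipEntry` and `firstZipEntry(in:)` are names invented for this example. It reports the entry name and whether the entry is stored uncompressed (method 0), which is what the USDZ specification calls for as far as we understand it.

```swift
import Foundation

/// Hypothetical summary of the first entry in a ZIP-style archive.
struct FirstZipEntry: Equatable {
    let name: String
    let isStored: Bool   // true when the compression method is 0 (no compression)
}

/// Reads the first ZIP local file header in `data`, if there is one.
/// Layout used: signature at 0, compression method at 8,
/// file name length at 26, file name starting at 30.
func firstZipEntry(in data: Data) -> FirstZipEntry? {
    let bytes = [UInt8](data)
    // Every local file header starts with "PK\u{03}\u{04}".
    guard bytes.count >= 30,
          Array(bytes[0..<4]) == [0x50, 0x4B, 0x03, 0x04] else { return nil }

    // ZIP header fields are little-endian.
    func u16(_ offset: Int) -> Int {
        Int(bytes[offset]) | (Int(bytes[offset + 1]) << 8)
    }

    let method = u16(8)
    let nameLength = u16(26)
    guard bytes.count >= 30 + nameLength,
          let name = String(bytes: bytes[30..<(30 + nameLength)], encoding: .utf8)
    else { return nil }

    return FirstZipEntry(name: name, isStored: method == 0)
}

// Usage, with a placeholder path:
// if let data = try? Data(contentsOf: URL(fileURLWithPath: "/path/to/model.usdz")),
//    let entry = firstZipEntry(in: data) {
//     print(entry.name, entry.isStored)
// }
```

For anything beyond curiosity, a proper USD library or Apple's own tooling is the better choice. The sketch is only meant to show there's no magic in the container.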

USDZ files are versatile. View and capture 3D models directly on an iPhone or iPad that has a LiDAR camera. They can be captured with Reality Composer (a free download on the App Store). Once captured, rotate, zoom, and inspect objects as if they were right there, in as high fidelity as the screen can show. Then bring the digital to life with AR. Thanks to Apple's ARKit framework, USDZ files can be used to create immersive augmented reality experiences. Imagine placing a virtual gopher on the coffee table or having a 3D model of a dream house come to life in the living room. Further, as with the maker communities at sites like Thingiverse and MakerWorld, artists, designers, and developers can easily share their 3D work in a universally accessible format. This opens up exciting possibilities for online portfolio presentations and product visualization. Unlike traditional .stl or .blend types of formats, there’s a bunch of metadata packed in USDZ, like sizes, to make it easier to plug into ARKit.
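On visionOS, one low-effort way to get a USDZ file on screen is SwiftUI's `Model3D` view. Here is a minimal sketch, assuming a file named `Gopher.usdz` has been added to the app bundle. Both the asset and the `GopherPreview` view are hypothetical.

```swift
import SwiftUI
import RealityKit

/// Hypothetical view: loads "Gopher.usdz" from the app bundle and
/// shows a spinner while the model is loading.
struct GopherPreview: View {
    var body: some View {
        Model3D(named: "Gopher") { model in
            model
                .resizable()
                .aspectRatio(contentMode: .fit)
        } placeholder: {
            ProgressView()
        }
    }
}
```

`Model3D` loads asynchronously, much like `AsyncImage` does for pictures, which is why the placeholder is there. For models that need to react to gestures or physics, a `RealityView` with entities is probably the better fit.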

While originally developed by Apple in collaboration with Pixar, the USDZ format is gaining traction within the broader 3D community. Several software tools and online platforms now support creating, converting, and viewing USDZ files, regardless of device or operating system. Apple also has a tool called Reality Converter that can be used to migrate files from .obj to .usdz. There are tons of online converters that can go from .stl to .obj, so there is a veritable cornucopia - or a plethora - of objects out there just waiting to be used. Just make sure to understand the licensing restrictions on some of these files if finding stuff out on maker sites. My .stl, .blend, or .ztl files are always licensed to be permissive for commercial use (https://www.thingiverse.com/krypted/designs), but not all are. And selling an app is… a commercial use.

Experiment and iterate. Don't be afraid to push the boundaries of different features and explore the possibilities of Vision Pro and AR/spatial computing in general. Start with the simulator and, when available, the headset itself to test apps and refine experiments into smooth user experiences. For existing apps, the more modern the code, the easier the transition to add Vision Pro support. But from experience, lemme tell ya’, don’t just test it in the simulator and ship it. Modals might not appear, or other artifacts might show up on these new planes, even when everything looks fine in the simulator. Beg, borrow, steal, or bribe someone to run the app on a device and take it through manual QA to make sure everything works in the real, er, I mean augmented world.

One important aspect of the Vision Pro is that these APIs aren’t all new. Some are. But they’re all well thought out, and developers can do nearly anything they can think of. Looking at past product releases, the teams behind iPods, iPhones, HomePods, and AirTags either didn’t think of APIs or purposefully didn’t expose functionality. It feels like watchOS had a great extension to build on and that the APIs available for visionOS learned a lot from the massive scaling of options in the watch, the post-3GS iPhone era, and the iPad. It’s the most complete set of APIs I’ve seen Apple release on day zero for any product. And you can see where there’s lots of little stubs left for more here and there!

Things are moving incredibly fast in this space right now. Apple’s release has prompted others to announce their own new stuff. They all use similar logic (after all, React is declarative and fairly micro-servicey, so while code might not be re-usable, logic is). And the timeline continues to accelerate with innovations in display technology, processing power, and sensor fusion (the feedback loop in a cybernetic culture). Expect even more natural and intuitive interactions: Gesture and voice control, haptic feedback (also employed in those early F-15 cockpits), and brain-computer interfaces (also tested for 4th gen fighter jets) will blur the lines between user and digital world, and not just for military uses. Spatial computing environments are blending the real and the virtual seamlessly, enabling applications across domains like gaming, education, healthcare, and design.

Further, with Apple in the lead, there is a focus on accessibility, security, and privacy (starting with Optic ID) to ensure that diverse populations and ethical considerations are addressed as this technology moves into more widespread adoption. Those weren’t an issue in the cockpit or with walking bipedal military robots during testing in Vietnam - but more on GE's work there later, as we look at using AR to create and control objects in the real world. But that’s a whole other article. For now, the hope is that looking at the historical context will inspire new and crazy ideas. Be wild. Push boundaries. Move fast, but don’t break things. Create. Evolve. We look forward to seeing it all!

Oh, and a final thought - if the price tag seems steep, the Vision Pro makes a great monitor too. Strap it on with your MacBook right next to it and use it as a screen. ymmv but you won't find a better screen out there! Rather than fire up Xcode and a simulator on 3 different screens, just use Vision Pro and have more real estate than ya' know what to do with!
